

Important notice

This version of the Favourite Articles tool has been archived and will not be updated again before it is permanently removed.

Please consult the redesigned version of the Favourite Articles tool for our most up-to-date content, and don't forget to update your bookmarks!


Character Sets and Their Mysteries. . .

I first ran into problems with character sets in 1984 or 1985, starting with the very question of what a character set is, particularly from a computer science standpoint.

English and French are written using 26 letters, 10 digits and a number of accents and punctuation marks. The first computer character sets were limited to upper-case letters, digits and certain punctuation marks.

In 1972, I worked for CNCP Telegraph and noticed that telegrams used a character set with no accented letters and no lower-case letters: the telex set, based on the Baudot code¹.

A character set is essentially a convention or standard recognized by a certain number of users. Over the years, character sets of varying completeness have emerged. One of the best known is ASCII, which consists of 128 characters², including upper- and lower-case letters but no accented letters and no characters like the œ in the British spelling fœtus.
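To make ASCII's limits concrete, here is a minimal Python sketch that lists the characters of a string falling outside ASCII's 128 codes (0 to 127). The function name `non_ascii` is our own, chosen for illustration.

```python
def non_ascii(text: str) -> list[str]:
    """Return the characters of `text` whose code is outside ASCII (0-127)."""
    return [ch for ch in text if ord(ch) > 127]

print(non_ascii("foetus"))  # plain ASCII spelling: nothing to report
print(non_ascii("fœtus"))   # the ligature œ is not in ASCII
print(non_ascii("Être"))    # neither are accented letters
```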

Computers assign a code to each character; the code stands for the abstract symbol, not for its appearance.

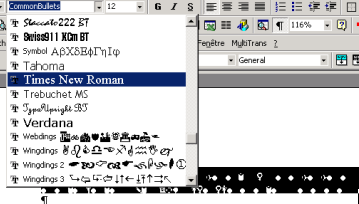

The same character can be drawn in different ways by different fonts and font attributes (bold, italic, etc.). Sometimes a font does not contain every character in a given set.

Allow me to cut to the chase with an unusual statement: computers store numerical values that represent the characters they manipulate³. For example, in ASCII, "A" is represented by code 65, "B" by 66, and so on.

However, each platform (combination of operating system and hardware) had more or less its own encoding, usually limited to 256 characters⁴. A quick aside: for computers, "A" and "a" are different characters (in ASCII, "a" is code 97).
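Python's built-in `ord` and `chr` expose these numeric codes directly, so the mapping described above can be checked in a few lines (for these characters, Unicode code points coincide with ASCII codes).

```python
# Character -> code, as described above
for ch in "ABa":
    print(ch, ord(ch))      # A 65, B 66, a 97

# Code -> character: the reverse mapping
print(chr(65), chr(97))     # A a

# Upper- and lower-case letters are distinct codes, 32 apart in ASCII
print(ord("a") - ord("A"))  # 32
```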

In the ’80s, I used the IBM PC, Apple's Apple II and Macintosh, and Commodore's VIC-20, C64 and Amiga, and observed other less popular models at work. Most used ASCII and extended it with other characters for accented letters or graphic symbols for drawing.

Naturally, the codes assigned to accented letters differed from one manufacturer to the next, and there was no standard. For example, on a PC, code 130 (typed as [Alt] 130) gives "é", while the same code on a Mac gives "Ç".
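Python still ships both legacy tables, so the clash can be reproduced: `cp437` is the codec for the original IBM PC code page and `mac_roman` for the classic Mac OS set. Treating these two as representative of the machines mentioned is our assumption.

```python
# The same byte, 130, decoded with the IBM PC table and with the Mac table
code = bytes([130])
pc_char = code.decode("cp437")
mac_char = code.decode("mac_roman")
print(pc_char, mac_char)  # é Ç
```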

Not only did each computer manufacturer have its own ASCII extension; each printer manufacturer had its own as well. To complicate matters further, printer designers offered alternative sets, selected by setting tiny DIP switches. First you had to find these switches, which were usually hidden under the print head or in some equally hard-to-reach spot. The documentation was no better.

In 1987-1988, soon after I joined the Translation Bureau, I witnessed the arrival of the first Ogivar microcomputers. The poor technician in charge of configuring the printers, one Roger Racine⁵, asked me for a hand.

At that time, a PC was a lot cheaper than a Mac but did not yet support accented upper-case letters, so the font had to be modified. Software took care of the displayed text, but it was Mr. Racine who wrote the code for the printer. Rumour has it that a certain bearded man helped him out.

We reassigned codes originally used for graphic or Greek characters to our accented characters.
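The idea of diverting codes can be sketched as a decoding table that starts from code page 437 and overrides a few entries. Which codes were actually reassigned is not documented here, so the three in `REASSIGNED` (and the helper name `decode_custom`) are hypothetical, chosen only for illustration.

```python
# Hypothetical reassignments: code page 437 puts Greek letters at these codes.
REASSIGNED = {
    0xE2: "À",  # was Γ (capital gamma)
    0xE4: "È",  # was Σ (capital sigma)
    0xE8: "Ê",  # was Φ (capital phi)
}

def decode_custom(data: bytes) -> str:
    """Decode with code page 437, except for the reassigned codes."""
    return "".join(REASSIGNED.get(b, bytes([b]).decode("cp437")) for b in data)

print(decode_custom(b"\xe2 la fen\x88tre"))  # 0x88 is already ê in code page 437
```

Every other code keeps its usual meaning, which is why only characters nobody needed (here, Greek capitals) were sacrificed.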

I believe that Roger Racine has fabulous memories of the experience. To draw a character, you needed to know the number of pixel lines per character for a given printer (8 to 24 depending on printer quality).

To describe the shape of a character to the printer, the computer sent the values of a stack of bytes, one per pixel line⁶. Each byte is made up of 8 bits (each worth 0 or 1) and can hold a value between 0 and 255.

In binary, 0 is written 00000000 and 255 is written 11111111. When a character is drawn, each bit represents a pixel, which is printed when the bit is 1.
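A minimal Python sketch of the bit-to-pixel rule, assuming the most significant bit is the leftmost pixel (the helper name `byte_to_pixels` is ours):

```python
def byte_to_pixels(value: int, on: str = "1", off: str = " ") -> str:
    """Render one byte as a row of 8 pixels, most significant bit on the left."""
    if not 0 <= value <= 255:
        raise ValueError("a byte can only hold values from 0 to 255")
    bits = format(value, "08b")  # e.g. 15 -> "00001111"
    return "".join(on if bit == "1" else off for bit in bits)

print(format(0, "08b"), format(255, "08b"))  # 00000000 11111111
print(repr(byte_to_pixels(15)))              # '    1111'
```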

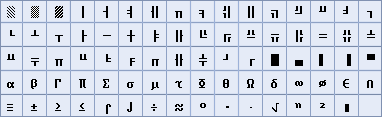

To help visualize the concept, below is a picture of a magnified number and its representation via twelve bytes. The zeros would not show up on the printout.

0 0 0 0 1 1 1 1

0 0 0 1 1 1 1 1

0 0 0 1 0 0 0 0

0 0 0 1 1 0 0 0

0 0 1 1 1 1 1 0

0 0 0 0 0 1 1 1

0 0 0 0 0 0 1 1

0 0 0 0 0 0 0 1

0 0 0 0 0 0 0 1

0 0 0 0 0 0 0 1

0 1 1 0 0 0 1 0

0 1 1 1 1 1 0 0

1 1 1 1

1 1 1 1 1

1

1 1

1 1 1 1 1

1 1 1

1 1

1

1

1

1 1 1

1 1 1 1 1

The second time, I replaced the zeros with spaces.
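The printer received numbers, not drawings. This Python sketch converts the twelve pixel lines of the digit into the byte values that encode them, assuming one byte per pixel line as described above (many dot-matrix printers actually took graphics column by column, so this is a simplification).

```python
# The twelve pixel lines of the digit, one string of bits per line
GLYPH_ROWS = [
    "00001111", "00011111", "00010000", "00011000",
    "00111110", "00000111", "00000011", "00000001",
    "00000001", "00000001", "01100010", "01111100",
]

def rows_to_bytes(rows: list[str]) -> list[int]:
    """Convert each line of bits into the byte value that encodes it."""
    return [int(row, 2) for row in rows]

values = rows_to_bytes(GLYPH_ROWS)
print(values)  # [15, 31, 16, 24, 62, 7, 3, 1, 1, 1, 98, 124]

# Printing each value back with blanks for zeros redraws the digit
for v in values:
    print(format(v, "08b").replace("0", " "))
```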

To create an accented upper-case letter, we reduced its size by at least two lines, leaving space for the desired accent:

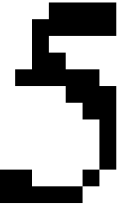

| Pixel line | Acute accent | Grave accent | Circumflex accent | Diaeresis |
|---|---|---|---|---|
| 1 | 00001000 | 00010000 | 00001000 | 00100100 |
| 2 | 00010000 | 00001000 | 00010100 | 00000000 |
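Building an accented capital then amounts to stacking two accent lines on top of a letter shrunk by two lines. In the Python sketch below, the accent patterns come from the table above, while the 12-line cell size and the 10-line capital `SMALL_E` are hypothetical.

```python
# Two-line accent patterns from the table above
ACCENTS = {
    "acute":      ["00001000", "00010000"],
    "grave":      ["00010000", "00001000"],
    "circumflex": ["00001000", "00010100"],
    "diaeresis":  ["00100100", "00000000"],
}

# Hypothetical capital E, reduced to 10 lines to make room for the accent
SMALL_E = [
    "00111110", "00100000", "00100000", "00100000", "00111100",
    "00100000", "00100000", "00100000", "00111110", "00000000",
]

def accented(letter_rows: list[str], accent: str) -> list[str]:
    """Stack a two-line accent on top of a letter reduced to fit below it."""
    return ACCENTS[accent] + letter_rows

for row in accented(SMALL_E, "acute"):
    print(row.replace("0", " ").replace("1", "#"))
```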

All that was left to do was upload our new font to the printer.

These days

It is tempting to think that problems with accented characters affect only Francophone readers, but a linguist asked to translate a text in which all the accented characters are garbled or deleted is just as hard put.

Believe it or not, some parts of the Internet still recognize only ASCII characters (128 characters). Here is what the salutation looked like in an e-mail I received from an organization that deals mainly with localization:

Dear Andr,

[. . .]

Picture a translation request arriving by e-mail like the one above, with every accented letter cut off. The famous "Être ou ne pas être" becomes "tre ou ne pas tre", "têtu" becomes "ttu", "été" becomes "t", and so on.
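We can't know exactly how that organization's mail system behaved, but the effect is easy to reproduce: Python's `encode("ascii", errors="ignore")` silently drops every character outside ASCII. The function name `ascii_only_gateway` is ours.

```python
def ascii_only_gateway(text: str) -> str:
    """Mimic a gateway that silently drops every non-ASCII character."""
    return text.encode("ascii", errors="ignore").decode("ascii")

for phrase in ["Être ou ne pas être", "têtu", "été", "Dear André,"]:
    print(ascii_only_gateway(phrase))
# tre ou ne pas tre / ttu / t / Dear Andr,
```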

When this happens, suggest that the client paste the entire document into a Word or WordPerfect file and send it as an attachment. Unlike the body of the message, attachments are encoded for transmission (typically in Base64) rather than interpreted by Internet gateways, so they usually arrive safe and sound. However, a Web page or a text file can still suffer the horrors of 7-bit ASCII (128 characters).
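Base64 is what lets attachments survive ASCII-only gateways: it rewrites any bytes using only letters, digits, "+", "/" and "=". A minimal round trip with Python's standard `base64` module, using a French phrase as a stand-in for an attachment:

```python
import base64

body = "Être ou ne pas être".encode("utf-8")     # the attachment's raw bytes
encoded = base64.b64encode(body)                 # what travels through the gateway
print(encoded.isascii())                         # True: only plain ASCII characters
print(base64.b64decode(encoded).decode("utf-8")) # the accents survive the round trip
```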

Another frequent problem with character sets and fonts can affect you even if you don’t have to translate or read French.

When someone sends you a text written in a font that doesn’t exist on your computer, the automatic substitution sometimes selects a font like this: ![]() (Wingdings).

(Wingdings).

If you can read the message. . . you’re a mutant.

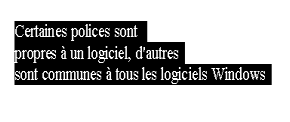

It happens to me all the time when someone sends me a message in WordPerfect (which I no longer have on my home computer). I can open the document in Word, but if the sender chose a WordPerfect font instead of a Windows font (Arial, Times, etc.), the message looks something like this:

If this happens to you, don’t panic: just highlight the text and choose another font.

Unicode is the answer! But. . .

256 codes are enough for English and French. For languages that use thousands of symbols, however, they fall far short, which led to encodings that use more than one byte per character. Two bytes (16 bits) allow 65,536 codes, and double-byte character sets (DBCS) were used for languages with thousands of symbols.
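The arithmetic behind these limits, plus a look at a real double-byte set: Shift_JIS, a Japanese encoding available as a Python codec, uses one byte for ASCII letters and two bytes for kanji. Shift_JIS is simply our choice of example.

```python
# One byte gives 2**8 codes; two bytes give 2**16
print(2 ** 8, 2 ** 16)  # 256 65536

# Shift_JIS mixes one-byte and two-byte codes
for ch in ["A", "日"]:
    print(ch, len(ch.encode("shift_jis")), "byte(s)")
```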

To have just one code representing all characters in all human languages (even artificial ones), researchers developed Unicode.

Unicode makes life easier for programmers and users. We are moving steadily toward Unicode: it is the document character set of HTML, and XML assumes UTF-8 or UTF-16 when no encoding is declared.

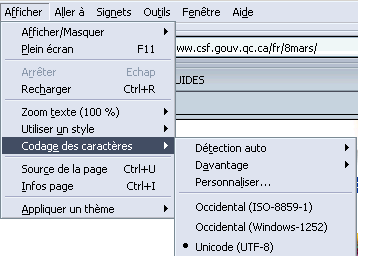

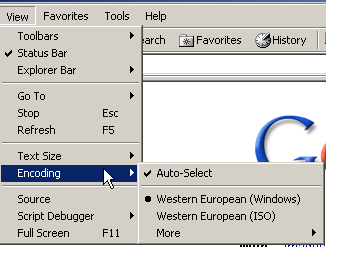

However, the transition to Unicode does sometimes play some nasty tricks. Documents can contain both Unicode and Windows (ANSI) characters, and sometimes also characters from extended ASCII. When the information specifying which character set was used is not shown on the HTML pages, the navigator uses (Unicode UTF-8), one of the "hybrids" of Unicode7, by default.

You can also experience problems with characters in fonts that do not exist on your computer, such as Chinese or Japanese (of course, if you can read these languages, you will have these fonts).

Accented characters appear as illegible text. This problem is easy to correct. Go to the View menu and select a different encoding:

As you can see, the names can vary somewhat from one navigator to another.

If Unicode is selected, the encoding switches to 8859-1(ISO) or Windows. If one of the first two is selected, the encoding switches to Unicode and, 99% of the time, the display will be corrected.

If automatic selection (or detection) is activated, the problem occurs less frequently. I therefore suggest that you activate this option if it is not activated already.

As I mentioned above, files can also contain more than one character set. Last year, I had the pleasure of seeing a file that contained both characters in UTF-8 (Unicode) AND characters in extended ASCII.

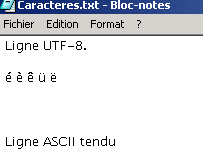

A text file in UTF-8 includes information on its type. Opening it in Notepad provides an interpretation that hides the accented characters in extended ASCII.

The same file opened in Word displays these characters, but interprets them based on the Windows character set (different from extended ASCII).

The problem here is that in this file identified as UTF-8, a process or human action introduced extended ASCII characters.

If I were to open the file in Windows Notepad, I would get something like this:

The line of accented characters in extended ASCII disappears completely.

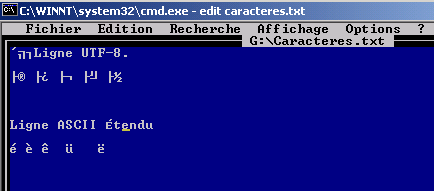

Here is what the same text looks like in DOS with the Edit editor:

This time, UTF-8 is massacred.

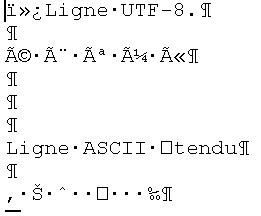

Here is what it looks like in Word:

I must confess that finding a solution was not a barrel of laughs. But the solution was actually quite simple.
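The three views can be imitated by decoding one mixed byte string three ways. This is a hypothetical file, and we assume the extended ASCII was code page 437 and that Word applied Windows-1252 (`cp1252`); real editors may display the bad bytes differently, for instance by hiding them rather than showing U+FFFD.

```python
# Hypothetical mixed file: "été" saved as UTF-8, a space, then "été" in extended ASCII
data = "été".encode("utf-8") + b" " + "été".encode("cp437")

notepad_view = data.decode("utf-8", errors="replace")  # UTF-8 reader: extended ASCII lost
dos_view = data.decode("cp437")                        # DOS reader: UTF-8 is massacred
word_view = data.decode("cp1252", errors="replace")    # Windows reader: both look wrong

for name, view in [("Notepad", notepad_view), ("DOS", dos_view), ("Word", word_view)]:
    print(f"{name:8}{view}")
```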

Because I could see the extended ASCII characters in DOS and the others in Notepad, I just needed to make a few global replacements. Not character for character (that would have been too simple), but by way of tags: each problem character was first replaced by a unique string of plain letters.

In DOS:

É was replaced by Emajaigu, é by eaigu.

È was replaced by Egrave, è by egrave, and so on.

I continued the process for all the characters that caused problems: accented letters, quotation marks and one or two others.

I then performed the opposite process in Notepad, replacing each tag with the proper character, and the solution was complete.
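Today the same repair can be automated in a different way from the tag round trip described above: let Python's UTF-8 decoder flag the bytes that are not valid UTF-8, and decode only those as extended ASCII (code page 437 assumed). The handler name `cp437_fallback` is ours. This works because nearly all accented letters in code page 437 sit in the range 0x80 to 0xBF, which UTF-8 reserves for continuation bytes, so on their own they are never valid UTF-8; an extended-ASCII character that happened to follow a UTF-8 lead byte could still be misread.

```python
import codecs

def _cp437_fallback(err: UnicodeDecodeError) -> tuple[str, int]:
    """Error handler: decode the bytes UTF-8 rejected as code page 437."""
    bad_bytes = err.object[err.start:err.end]
    return bad_bytes.decode("cp437"), err.end

codecs.register_error("cp437_fallback", _cp437_fallback)

def repair_mixed(data: bytes) -> str:
    """Decode valid UTF-8 as UTF-8 and everything else as extended ASCII."""
    return data.decode("utf-8", errors="cp437_fallback")

# Hypothetical mixed file: UTF-8 "été", a space, then extended-ASCII "été"
mixed = "été".encode("utf-8") + b" " + "été".encode("cp437")
fixed = repair_mixed(mixed)
print(fixed)                   # été été
clean = fixed.encode("utf-8")  # re-saved as pure UTF-8
```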

I truly hope this never happens to you. If it does, maybe you'll remember this story.

But I know it will happen to you one day, and then you'll know I didn't tell this story just for fun. When it does, and if your client is a technician, could you please send me a photo of the look on his or her face when you come up with the solution?

Notes

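- 1 "Old-timers" will remember modem speeds expressed in baud rather than in bits per second. Strictly speaking, the baud (named after Baudot) counts signal changes per second, which on the earliest modems matched the number of bits per second. Then kbps (kilobits per second) appeared.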

- 2 The 256-character codes were not ASCII proper: each company had its own extended version.

- 3 In fact, they only store 1s and 0s.

- 4 Computers handled bytes (octets) of 8 bits, which can represent values from 0 to 255.

- 5 Now Director of Technology Management at the Translation Bureau.

- 6 Again, based on the number of pixel lines per character.

- 7 Unicode has several encoding forms (UTF-8, UTF-16 and UTF-32); UTF-8 is the most common.

© Public Services and Procurement Canada, 2024

TERMIUM Plus®, the Government of Canada's terminology and linguistic data bank

Writing tools – Favourite Articles

A product of the Translation Bureau